Iiinteresting. I’m on the larger AB350-Gaming 3 and it’s got REV: 1.0 printed on it. No problems with the 5950X so far. 🤐 Either sheer luck or there could have been updated units before they officially changed the rev marking.

On paper it should support it. I’m assuming it’s the ASRock AB350M. With a certain BIOS version of course. What’s wrong with it?

B350 isn’t a very fast chipset to begin with

For sure.

I’m willing to bet the CPU in such a motherboard isn’t exactly current-gen either.

Reasonable bet, but it’s a Ryzen 9 5950X with 64GB of RAM. I’m pretty proud of how far I’ve managed to stretch this board. 😆 At this point I’m waiting for blown caps, but the case temp is pretty low so it may end up trucking along for a surprisingly long time.

Are you sure you’re even running at PCIe 3.0 speeds too?

So given the CPU, it should be PCIe 3.0, but that doesn’t remove any of the queues/scheduling suspicions for the chipset.
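One way to check the negotiated link speed rather than assume it: on Linux, PCIe devices expose `current_link_speed`, `max_link_speed`, `current_link_width` and `max_link_width` under sysfs. A minimal sketch, assuming a Linux host; the helper names `pcie_links` and `downgraded` are made up for illustration:

```python
from pathlib import Path

# Link attributes that Linux exposes per PCIe device under sysfs.
ATTRS = ("current_link_speed", "max_link_speed",
         "current_link_width", "max_link_width")


def pcie_links(root="/sys/bus/pci/devices"):
    """Return {pci_address: {attr: value}} for devices exposing all link attributes."""
    links = {}
    base = Path(root)
    if not base.is_dir():  # not Linux, or sysfs not mounted
        return links
    for dev in sorted(base.iterdir()):
        info = {}
        for attr in ATTRS:
            try:
                info[attr] = (dev / attr).read_text().strip()
            except OSError:
                break  # attribute missing or unreadable: skip this device
        if len(info) == len(ATTRS):
            links[dev.name] = info
    return links


def downgraded(links):
    """Keep only devices whose current link is below their own maximum."""
    return {
        addr: info for addr, info in links.items()
        if info["current_link_speed"] != info["max_link_speed"]
        or info["current_link_width"] != info["max_link_width"]
    }


if __name__ == "__main__":
    for addr, info in pcie_links().items():
        print(addr,
              info["current_link_speed"], "x" + info["current_link_width"],
              "(max", info["max_link_speed"], "x" + info["max_link_width"] + ")")
```

A link running below its maximum isn't necessarily a fault: some devices drop link speed at idle to save power, and the maximum reported is the device's own capability, so it's worth checking under load.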

I’m now replicating data out of this pool and the read load looks perfectly balanced. Bandwidth’s fine too. I think I have no choice but to benchmark the disks individually outside of ZFS once I’m done with this operation in order to figure out whether any show problems. If not, they’ll go in the spares bin.

I put the low IOPS disk in a good USB 3 enclosure, hooked to an on-CPU USB controller. Now things are flipped:

| pool | capacity alloc | capacity free | operations read | operations write | bandwidth read | bandwidth write |
|---|---|---|---|---|---|---|
| storage-volume-backup | 12.6T | 3.74T | 0 | 563 | 0 | 293M |
| mirror-0 | 12.6T | 3.74T | 0 | 563 | 0 | 293M |
| wwn-0x5000c500e8736faf | - | - | 0 | 406 | 0 | 146M |
| wwn-0x5000c500e8737337 | - | - | 0 | 156 | 0 | 146M |

You might be right about the link problem.

Looking at the B350 diagram, the whole chipset is hooked via a PCIe 3.0 x4 link to the CPU. The other pool (the source) is hooked via a USB controller on the chipset. The SATA controller is also on the chipset so it also shares the chipset-CPU link. I’m pretty sure I’m also using all the PCIe links the chipset provides for SSDs. So that’s roughly 4GB/s total for the whole chipset. Now I’m probably not saturating the whole link in this particular workload, but perhaps there might be another related bottleneck.
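For reference, the 4GB/s figure falls out of the PCIe 3.0 numbers: 8 GT/s per lane with 128b/130b encoding, times 4 lanes. A rough back-of-the-envelope sketch; the traffic estimate is an assumption (both mirror legs on chipset SATA at 146M each, plus a source read of about the same rate over chipset USB), not something measured:

```python
def pcie_bandwidth_gb_per_s(gt_per_s, lanes, encoding=128 / 130):
    """Usable one-direction bandwidth in GB/s, before protocol overhead."""
    # One transfer carries one bit per lane; the encoding factor removes
    # line-code overhead; dividing by 8 converts bits to bytes.
    return gt_per_s * encoding * lanes / 8


# PCIe 3.0: 8 GT/s per lane, 128b/130b encoding, x4 link to the chipset.
PCIE3_X4 = pcie_bandwidth_gb_per_s(8.0, 4)

# Assumed traffic over the chipset link during replication, in MiB/s:
# two mirror legs written at 146 each, plus a source read of about 146.
assumed_mib_per_s = 2 * 146 + 146
assumed_gb_per_s = assumed_mib_per_s * 1024 * 1024 / 1e9

print(f"PCIe 3.0 x4: {PCIE3_X4:.2f} GB/s")
print(f"assumed load: {assumed_gb_per_s:.2f} GB/s "
      f"({assumed_gb_per_s / PCIE3_X4:.0%} of the link)")
```

Under those assumptions the workload uses a small fraction of the link, which fits the suspicion that raw bandwidth isn't the limit and something like queueing or scheduling is.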

Turns out the on-CPU SATA controller isn’t available when the NVMe slot is used. 🫢 Swapped SATA ports, no diff. Put the low IOPS disk in a good USB 3 enclosure, hooked to an on-CPU USB controller. Now things are flipped:

| pool | capacity alloc | capacity free | operations read | operations write | bandwidth read | bandwidth write |
|---|---|---|---|---|---|---|
| storage-volume-backup | 12.6T | 3.74T | 0 | 563 | 0 | 293M |
| mirror-0 | 12.6T | 3.74T | 0 | 563 | 0 | 293M |
| wwn-0x5000c500e8736faf | - | - | 0 | 406 | 0 | 146M |
| wwn-0x5000c500e8737337 | - | - | 0 | 156 | 0 | 146M |
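Since both disks show the same write bandwidth but very different write operation counts, the average size per write has to differ. A quick sketch of that arithmetic using the figures above, assuming the `M` suffix means MiB/s and that the ops and bandwidth columns cover the same interval:

```python
def avg_write_kib(bandwidth_mib_per_s, ops_per_s):
    """Average size of one write in KiB, from bandwidth and operation rate."""
    return bandwidth_mib_per_s * 1024 / ops_per_s


# Per-disk write figures from the iostat output: (MiB/s, ops/s).
disks = {
    "wwn-0x5000c500e8736faf": (146, 406),
    "wwn-0x5000c500e8737337": (146, 156),
}

for name, (bandwidth, ops) in disks.items():
    print(f"{name}: ~{avg_write_kib(bandwidth, ops):.0f} KiB per write")
```

So the disk with fewer operations is moving the same amount of data in larger writes. That alone doesn't say which disk or link is at fault.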

Interesting. SMART looks pristine on both drives. Brand new drives - Exos X22. Doesn’t mean there isn’t an impending problem of course. I might try shuffling the links to see if that changes the behaviour, as the other comment suggested. Both are currently hooked to an AMD B350 chipset SATA controller. There are two ports that should be hooked to the on-CPU SATA controller. I imagine the two SATA controllers don’t share bandwidth. I’ll try putting one disk on the on-CPU controller.

Don’t let yourself be called a hypocrite - give $5. 😆

And now it no longer shows up.

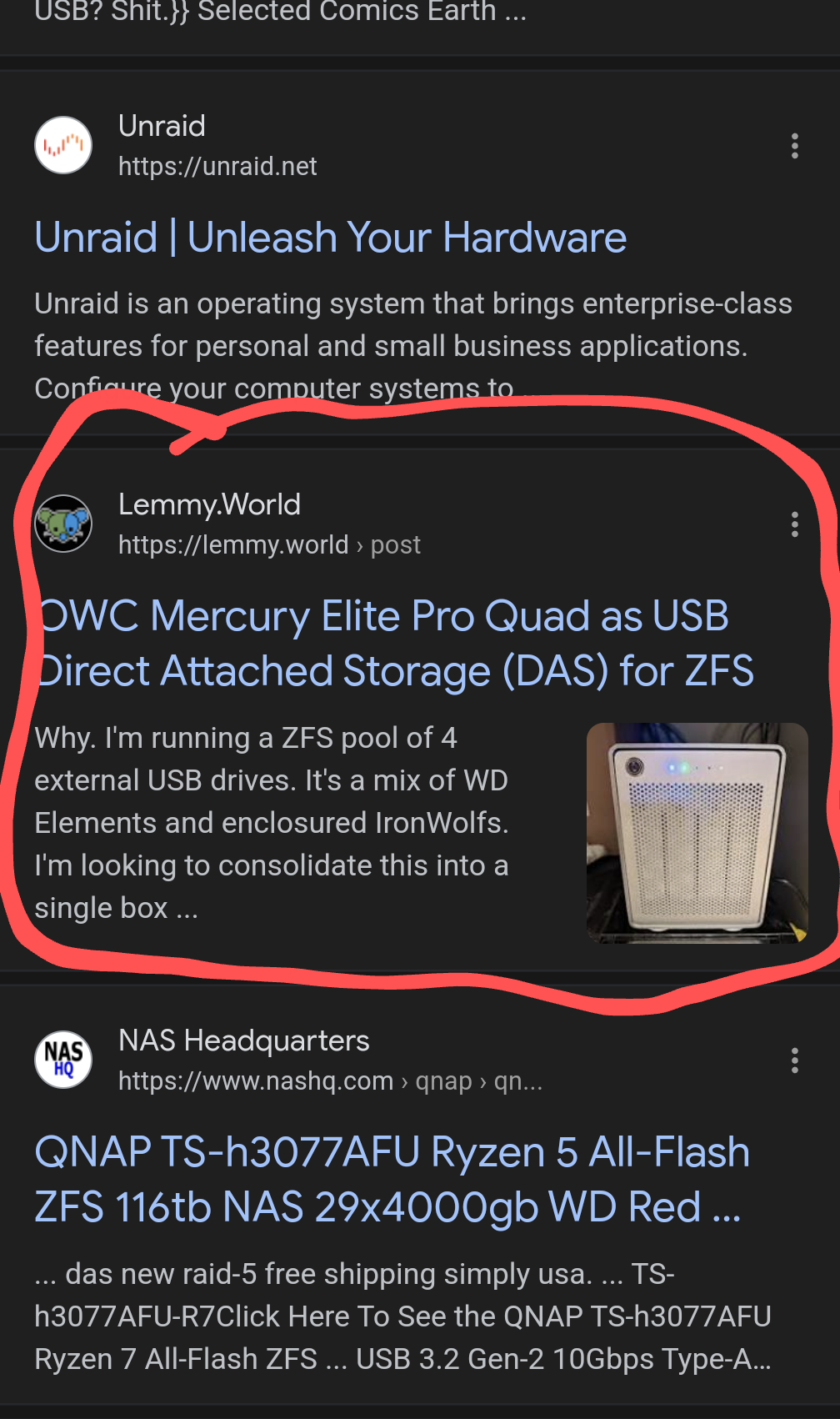

I was wondering what would be better for discoverability, to write this in a blog post, on GitHub, then link it here, or to just write it here. Turns out Google’s crawling Lemmy quite actively. This shows up within the first 10-15 results for “USB DAS ZFS”:

It appears that Lemmy is already a good place for writing stuff like this. ☺️

5950X, 64GB

It’s a multipurpose machine, desktop workstation, games, running various servers.

I think the board has reached the end of the road. 😅