I got an email ban.

1609 hours logged, 431 solved threads

Well, it is important to comply with the terms of service established by the website. It is highly recommended to familiarize oneself with the legally binding documents of the platform, including the Terms of Service (Section 2.1), User Agreement (Section 4.2), and Community Guidelines (Section 3.1), which explicitly outline the obligations and restrictions imposed upon users. By refraining from engaging in activities explicitly prohibited within these sections, you will be better positioned to maintain compliance with the platform’s rules and regulations and not receive email bans in the future.

Is this a joke?

This is an ironic ChatGPT answer, meant to (rightfully) creep you out.

NGL I read it and laughed at the AI-like response.

Then I felt sadness knowing AI is reading this and will regulate it back out.

Check the post history. Dude just seems like an ass.

Nah, but the user is. Their post history is… interesting.

If this is true, then we should prepare to be shouted at by ChatGPT for not already knowing about that simple error.

ChatGPT now just says “read the docs!” to every question.

Hey ChatGPT, how can I …

“Locking as this is a duplicate of [unrelated question]”

Take all you want, it will only take a few hallucinations before no one trusts LLMs to write code or give advice

> […]will only take a few hallucinations before no one trusts LLMs to write code or give advice

Because none of us have ever blindly pasted some code we got off google and crossed our fingers ;-)

It’s way easier to figure that out than check ChatGPT hallucinations. There’s usually someone saying why a response in SO is wrong, either in another response or a comment. You can filter most of the garbage right at that point, without having to put it in your codebase and discover that the hard way. You get none of that information with ChatGPT. The data spat out is not equivalent.

That’s an important point, and it ties into the way ChatGPT and other LLMs take advantage of a flaw in the human brain:

Because it impersonates a human, people are more inherently willing to trust it. To think it’s “smart”. It’s dangerous how people who don’t know any better (and many people that do know better) will defer to it, consciously or unconsciously, as an authority and never second guess it.

And the fact it’s a one on one conversation, no comment sections, no one else looking at the responses to call them out as bullshit, the user just won’t second guess it.

Your thinking is extremely black and white. Many, many people, probably most actually, second-guess chatbot responses.

Think about how dumb the average person is.

Now, think about the fact that half of the population is dumber than that.

Split segment of data without PII to staging database, test pasted script, completely rewrite script over the next three hours.

So they pulled a “reddit”?

These companies don’t realise their most engaged users generate a disproportionate amount of their content.

They will just go to their own spaces.

I think this is a good thing in the long run; the internet will become decentralised again.

I don’t know. It feels a bit like “When I quit my employer will realize how much they depended on me.” The realization tends to be on the other side.

But while SO may keep functioning fine it would be great if this caused other places to spring up as well. Reddit and X/Twitter are still there but I’m glad we have the fediverse.

Individuals leaving don’t have an immediate impact but entire groups of people?

People can see how that worked out for Boeing when many of their experienced engineers and quality inspectors left.

Well, reddit is doing fine so far. Shareholders are happy

Oh I didn’t consider deleting my answers. Thanks for the good idea

BarbraStackOverflow. I’d be shocked if deleted comments weren’t retained by them.

I think the reason for those bans is that they don’t want you rebelling and are showing that they don’t need you personally, thus ban.

Of course it’s all retained.

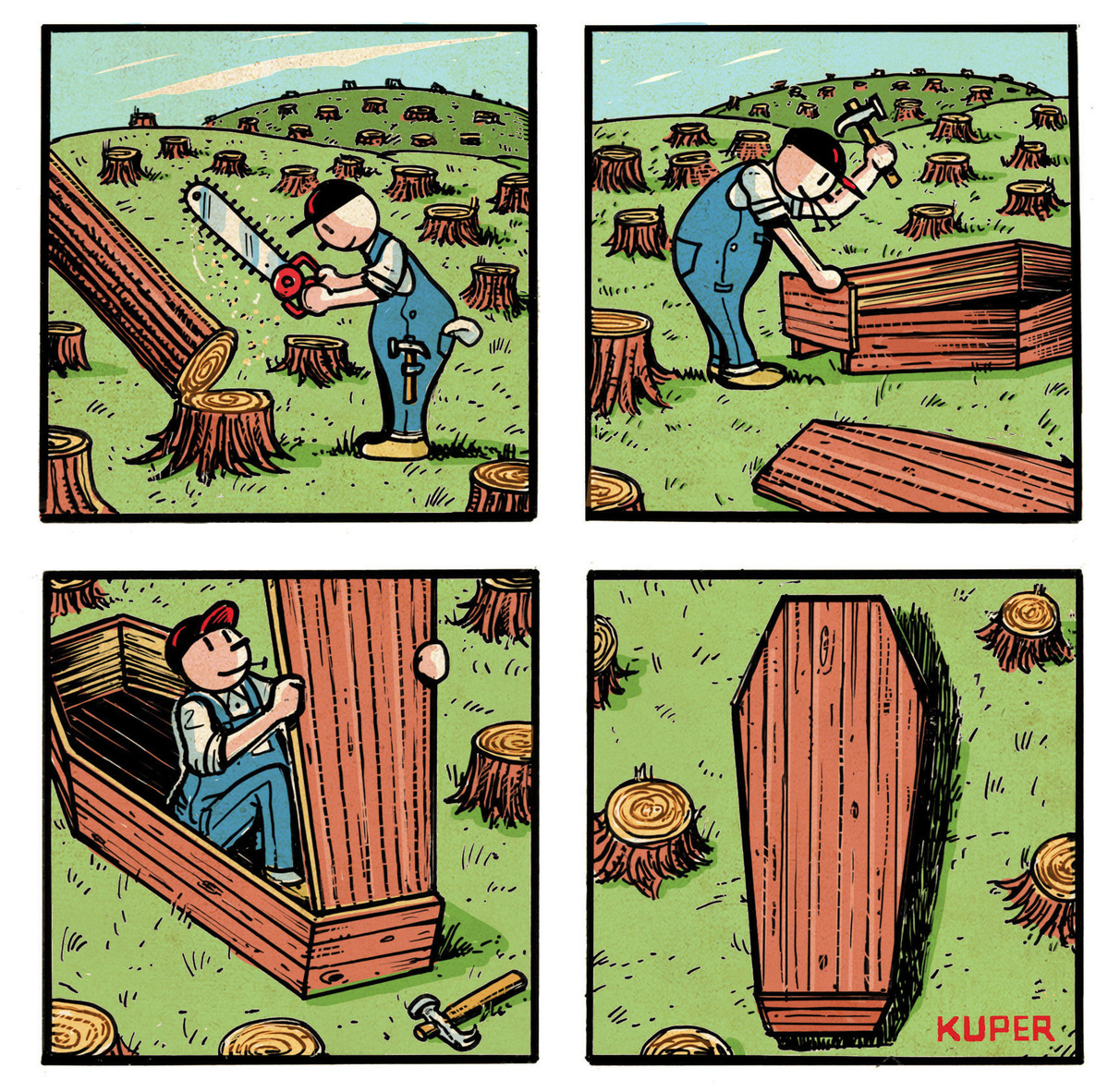

How many trees does a person need to make one coffin…

It’s a metaphor for us killing ourselves in the processes of deforestation, not a story of someone actually making a coffin.

See, this is why we can’t have nice things. Money fucks it up, every time. Fuck money, it’s a shitty backwards idea. We can do better than this.

Reddit/Stack/AI are the latest examples of an economic system where a few people monetize and get wealthy using the output of the very many.

Technofeudalism

Letting corporations “disrupt” forums was a mistake.

First, they sent the missionaries. They built communities, facilities for the common good, and spoke of collaboration and mutual prosperity. They got so many of us to buy into their belief system as a result.

Then, they sent the conquistadors. They took what we had built under their guidance, and claimed we “weren’t using it” and it was rightfully theirs to begin with.

If I were Stack Overflow, I would’ve transferred my backups to OpenAI weeks before the announcement for this very reason.

This is also assuming the LLMs weren’t already fed with scraped SO data years ago.

It’s a small act of rebellion but SO already has your data and they’ll do whatever they want with it, including mine.

It will not make a difference. The internet is free and open by design. You can always scrape the internet any time. A partnership will do nothing but make it a little bit more convenient for them.

Maybe we need a technical questions and answers site on the fediverse!

Not gonna stop your knowledge being fed to an AI.

What about instances that need you to be logged in to view posts and require authorized requests for federation?

That defeats the purpose of a knowledge base. The whole reason why everyone is using SO is that you don’t need an account to access it and it’s fully indexed by Google.

The real question is why the fuck are people ok with Google indexing SO and not OpenAI? Doesn’t make any fucking sense.

> The real question is why the fuck are people ok with Google indexing SO and not OpenAI? Doesn’t make any fucking sense.

Because Google is free and OpenAI isn’t. It’s one thing to take free content, index it, then allow anyone to access that index. It’s another thing when you take free content, index it, then hide that index behind a paywall.

Are you sure? Because Google is not free at all, you’re paying for it through privacy invasion and ads. While ChatGPT is actually free to use for end users - no ads, nothing.

No, it’s free https://chatgpt.com/

As your link is for custom enterprise solutions, it’s worth noting that Google has the same shit which also costs money https://cloud.google.com/pricing/

The price difference is that Google steals your data. That’s it. OpenAI steals data, asks for money to use most of their models, and buys even more data from other companies stealing user data (like Google and SO). Also, indexing web pages is not even the “stealing” part of Google; it’s just not comparable.

Yes, training AI on user data for free then selling the end product is a reasonable thing to be concerned about. It’d be different if the product was free or the data was sold to them with user consent.

SO has announced a subscription-based service trained on user data for free, and not only is there no opt-out, they’re mass-banning users for trying to “opt out” manually. Tell me one thing here that’s not completely fucked up.

But it’s free. Unlike Google.

While at the same time they forbid AI generated answers on their website, oh the turntables.

“The AI isn’t good enough to answer questions yet, it needs more training.”

“YOU HYPOCRITE!! If the A.I is too bad to use then why are you training it!”

Clean the damn mold out of your brain.